Event Streams


Event streams are project resources in Ignition that provide a unified construct for handling asynchronous events, letting you filter, transform, and react to those events in various ways. This reduces the complexity of configuring multiple scripts across separate subsystems and streamlines data manipulation. Event streams can be created and modified in the Designer.

Unlike mechanisms that require you to query for data, such as SQL databases, event streams are primarily event-driven or subscription-based: data arrives as events occur, so no active solicitation of data is needed.

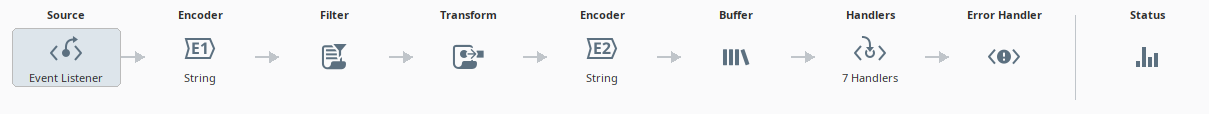

Event Stream Stages

Event streams are made up of several parts, called stages. Some stages, such as Filter and Transform, are optional, but they can help your event stream produce the result you want.

Source

The source stage, also known as the event source, is the origin of the data you want to send through the event stream. Sources include:

- Kafka (Requires Kafka module)

- HTTP Endpoint (Requires Web Dev module)

- Event Listener

- Tag Event

See the Types of Sources page for more details about each source.

Some sources may be unavailable unless the corresponding module is installed, such as Kafka for Kafka topics.

Event Objects - Data and Metadata

Source data is packaged into event objects, which are typically JSON payloads. Event objects consist of:

- Primary data - The primary object produced by the source. Primary data can be any type of Java object, but is most commonly a byte array (byte[]) or a String.

- Metadata - Information describing the event. Metadata is specific to each source, and a description of available fields should be included in the source documentation. Providing metadata in your source is optional.

Elements from an event object's primary data and metadata can be extracted in the handler stage using dot notation and the expression language. See the Handler Expressions section for more information on how to extract these elements, and the Source Data and Metadata page for more details about the elements themselves.

Encoder

The encoder stage lets you encode data into a specific format. An event stream can have two encoder stages: the first follows the source stage, and an optional second one follows the transform stage when the transform is enabled. Encoder options include:

- String

- JsonObject

- Byte[]

By default, source data will be encoded as JSON.

The encoder stage also has a table that lets you change the encoding property from UTF-8 to a different encoding, such as UTF-16 or BASE_64.

The encoder stage is not a substitute for the transform stage, and the two should not be used interchangeably.

The best encoding type for your event stream depends on the kind of handler you are using.
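As a rough illustration of how the three encoder options differ, the following plain Python sketch turns raw source bytes into a string, a parsed JSON object, or leaves them as bytes, using a chosen character set. The function encode_payload and its parameters are hypothetical, the sketch only covers character encodings such as UTF-8 and UTF-16 (not BASE_64), and it is not Ignition's implementation.

```python
import json


def encode_payload(raw, encoder="JsonObject", charset="UTF-8"):
    """Hypothetical helper mirroring the String / JsonObject / Byte[] options.

    raw: the source's primary data as bytes.
    charset: a character encoding name such as "UTF-8" or "UTF-16".
    """
    if encoder == "Byte[]":
        return raw                      # pass the bytes through untouched
    text = raw.decode(charset)          # interpret the bytes using the chosen charset
    if encoder == "String":
        return text
    if encoder == "JsonObject":
        return json.loads(text)         # parse the text into a dict or list
    raise ValueError("unknown encoder: " + encoder)
```

A handler that writes raw bytes (for example, to a Kafka topic) may only need Byte[], while one that reads individual fields benefits from a parsed object; that is one reason the best choice depends on the handler.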

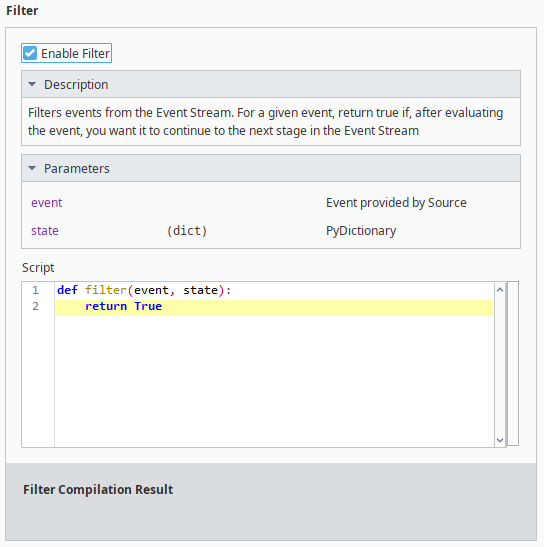

Filter

The filter stage is an optional script that takes the event payload as an argument and returns a boolean. If the script returns False, the event is dropped and is not passed to the next stage of the event stream.

The filter script takes two arguments (event and state), both of which can be further broken down.

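| Argument | Data Type | Subargument |
|---|---|---|
| event | PyDictionary | data (PyObject): The data from the event object. metadata (PyDictionary): The metadata from the event object. |
| state | PyDictionary | lastEvent: The last event seen by the filter, which may or may not have been dropped. |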

You can also add your own keys and values to the state dictionary to store state between filter calls.
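To illustrate the event and state arguments, here is a minimal filter sketch that drops repeated values. It assumes the payload was encoded as a JsonObject so that event["data"] behaves like a dict with a "value" key. The function name filter_event and the custom key lastPassedValue are hypothetical; the script stub the Designer provides may use a different name.

```python
def filter_event(event, state):
    """Hypothetical filter: pass an event only when its value changed.

    event: dict with "data" and "metadata" keys (assumed shape).
    state: dict that persists between filter calls.
    """
    value = event["data"].get("value")
    if "lastPassedValue" in state and state["lastPassedValue"] == value:
        return False                    # same value as last time: drop the event
    state["lastPassedValue"] = value    # custom key kept between calls
    return True                         # pass the event to the next stage
```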

Transform

The transform stage is an optional script that takes the event payload (from the source, or from the filter stage if the filter returned True) as an argument and returns a different object. This new object is used as the event payload for the rest of the event stream.

Similar to the filter script, the transform script takes two arguments (event and state), both of which can be further broken down:

- event (PyDictionary)

  - data (PyObject) - The data from the event object.

  - metadata (PyDictionary) - The metadata from the event object.

- state (PyDictionary)

  - lastEvent - The last event seen by the filter, which may or may not have been dropped.

You can also add your own keys and values to the state dictionary to store state between transform calls.

The transform stage often serves as a data preparation step: you can reshape the data into the form you want so that it is ready for the handler stage.
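A minimal transform sketch that reshapes a JSON payload for a handler. It assumes event["data"] is a dict (for example, produced by the JsonObject encoder) and that the metadata may contain a topic field; the function name transform_event, the output fields, and the custom state key transformCount are all hypothetical.

```python
def transform_event(event, state):
    """Hypothetical transform: build a flat record for a downstream handler."""
    data = event["data"]
    count = state.get("transformCount", 0) + 1
    state["transformCount"] = count     # custom key kept between calls
    # The returned object becomes the new event payload downstream.
    return {
        "name": data.get("name", "unknown"),
        "value": float(data.get("value", 0)),   # normalize numeric strings
        "topic": event["metadata"].get("topic"),
        "sequence": count,
    }
```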

Buffer

The buffer stage acts as a staging area for events before they are passed to the handlers. Events can be batched together, depending on how the buffer stage's properties are configured. Moreover, if your handlers are already working through a set of events, the buffer stage will not pass along queued events until the events in the handler stage have been resolved. You can configure the following buffer properties.

| Buffer Property | Description |
|---|---|
| Debounce | The amount of time, in milliseconds, that the event stream waits for another event before passing the current event or batch of events to the handler(s). The debounce timer restarts each time an event is received, so events continue to be batched together until either the Debounce time elapses with no new event or the Max Wait limit is reached, whichever comes first. Default is 100. |
| Max Wait | The maximum amount of time, in milliseconds, before an event or batch of events is sent to the handler(s). Default is 1000. |
| Max Queue Size | The maximum number of events that can be queued in the buffer stage before the event stream begins dropping events. A value of 0 means that there is no limit. Default is 0. |
| Overflow | Determines which event should be dropped once the Max Queue Size is reached. Options include: |

Events are dropped, not forwarded, when the Max Queue Size is reached. Events are forwarded to handlers only when the Debounce or Max Wait time is reached and the handlers are not currently processing events.
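To make the interaction between Debounce and Max Wait concrete, the following sketch groups a list of event arrival times (in milliseconds) into batches. It is a simplified, hypothetical model: it assumes the handlers are always idle and ignores Max Queue Size and Overflow, so it shows only when each batch would be flushed.

```python
def simulate_batches(arrivals_ms, debounce_ms=100, max_wait_ms=1000):
    """Return (flush_time_ms, [arrival times]) tuples for each batch.

    A batch opens at its first event. Every arrival restarts the debounce
    timer; the batch is flushed at whichever deadline comes first.
    """
    batches = []
    current = []
    debounce_deadline = max_wait_deadline = None
    for t in sorted(arrivals_ms):
        if current:
            flush_at = min(debounce_deadline, max_wait_deadline)
            if t >= flush_at:
                batches.append((flush_at, current))   # deadline passed first
                current = []
        if not current:
            max_wait_deadline = t + max_wait_ms        # a new batch opens
        current.append(t)
        debounce_deadline = t + debounce_ms            # restart the debounce timer
    if current:
        batches.append((min(debounce_deadline, max_wait_deadline), current))
    return batches
```

For example, events arriving every 80 ms never let a 100 ms debounce expire, so `simulate_batches(range(0, 1200, 80))` flushes its first batch at the 1000 ms Max Wait limit.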

Handler

The handler is the penultimate stage of the event stream and acts on the event object; what it does depends on the type of handler configured. Handlers include:

- Kafka (Requires Kafka module)

- Database (Requires SQL Bridge module)

- HTTP (Requires Web Dev module)

- Gateway Event

- Gateway Message

- Logger

- Script

- Tag

See the Types of Handlers page for more details about each handler.

Some handlers may be unavailable unless the corresponding module is installed, such as SQL Bridge for database handlers.

The handler stage can contain multiple handlers, which are executed sequentially. You can reorder the handlers to meet your needs.

Handler Expressions

Handler expressions use Ignition's Expression language. Unlike standard expressions, however, handler expressions have a feature unique to event streams: they let you pull specific primary data or metadata from the event object.

To extract elements from an event object within a handler, use dot notation wrapped in curly brackets. For example, to extract the topic metadata from a Kafka event, use {event.metadata.topic}. Similarly, to extract a specific data element, such as a name, from a Kafka event, use {event.data.name}. See the Source Data and Metadata page for more information on each source's extractable elements.
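The dot-notation lookup can be pictured as walking nested dictionaries. The sketch below mimics it with a regular expression over {event....} placeholders. It is an illustration only, since Ignition evaluates these references inside its own Expression language, and the resolve function is hypothetical.

```python
import re

# Matches placeholders such as {event.metadata.topic} or {event.data.name}.
_PLACEHOLDER = re.compile(r"\{(event(?:\.\w+)+)\}")


def resolve(template, event):
    """Hypothetical: replace {event.a.b} placeholders with values from 'event'."""
    def lookup(match):
        value = {"event": event}
        for key in match.group(1).split("."):
            value = value[key]          # raises KeyError if the path does not exist
        return str(value)
    return _PLACEHOLDER.sub(lookup, template)
```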

Common Settings

The following are settings that are available for all handlers.

Enabled

Each handler has an Enabled option, allowing users to toggle the specified handler as enabled or disabled.

Failure Handling

Failure handling defines what should happen if a handler is unable to process one or more events or throws an exception. The following failure mode behaviors are available.

| Failure Mode | Description |
|---|---|
| IGNORE | The error is logged, and the event stream moves on to the next handler. This also tells the source that the data was handled successfully. |
| ABORT | The error is logged, but the event stream does not move on to the next handler. This also tells the source that the data was not handled successfully. |
| RETRY | The error is logged, and the handler tries to handle the data again after a specified delay. |
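Sequential handler execution with the IGNORE and ABORT modes can be sketched as a loop over handlers. This is a hypothetical model: each handler is a (name, function, mode) tuple, RETRY is omitted, and the optional error_handler callback stands in for the error handler stage, which is invoked when a handler set to ABORT fails.

```python
import logging

log = logging.getLogger("event-stream-sketch")


def run_handlers(handlers, events, error_handler=None):
    """Run (name, func, mode) handlers in order; return True if handled.

    The return value models what is reported back to the source.
    """
    for name, func, mode in handlers:
        try:
            func(events)
        except Exception as exc:
            log.error("handler %s failed: %s", name, exc)   # logged in every mode
            if mode == "ABORT":
                if error_handler is not None:
                    error_handler(name, exc)   # stand-in for the error handler stage
                return False                   # skip remaining handlers
            # IGNORE: continue with the next handler
    return True
```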

The RETRY behavior can be further configured with the following properties.

| RETRY Property | Description |
|---|---|
| Retry Strategy | Whether retries are performed at a fixed or an exponential rate. |
| Retry Count | The number of retries allowed for the event handler. Default is 1. |
| Retry Delay | The number of seconds to wait before the handler tries to handle the data again. If the Retry Strategy property is set to EXPONENTIAL, this value is the initial delay, and each subsequent delay is scaled by the Multiplier property. Default is 1. |
| Multiplier | The value by which the Retry Delay is multiplied for each subsequent retry. For example, a value of 2 doubles the wait before each subsequent retry. Enabled only if the Retry Strategy property is set to EXPONENTIAL. Default is 1. |
| Max Delay | The maximum time between subsequent retries, in milliseconds, regardless of the Multiplier property. Enabled only if the Retry Strategy property is set to EXPONENTIAL. Default is 1. |
| Retry Failure | The behavior to follow if the Retry Count is reached and the handler still failed to handle the data. Available options include: |
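The following sketch computes the wait before each retry from Retry Count, Retry Delay, Multiplier, and Max Delay. It assumes the strategy values are the strings "FIXED" and "EXPONENTIAL" and, for simplicity, that Retry Delay and Max Delay are expressed in the same unit; the retry_delays function is hypothetical.

```python
def retry_delays(count, delay, strategy="FIXED", multiplier=1.0, max_delay=None):
    """Hypothetical: return the wait before each of 'count' retry attempts."""
    delays = []
    current = delay
    for _ in range(count):
        if strategy == "EXPONENTIAL" and max_delay is not None:
            delays.append(min(current, max_delay))   # Max Delay caps each wait
        else:
            delays.append(current)
        if strategy == "EXPONENTIAL":
            current *= multiplier                    # next wait grows by Multiplier
    return delays
```

For example, five exponential retries starting at 1 with a Multiplier of 2 and a cap of 10 wait 1, 2, 4, 8, and then 10.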

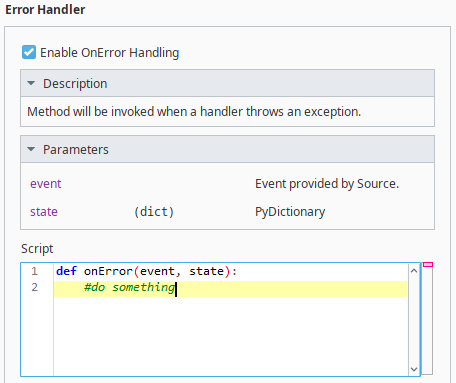

Error Handler

The error handler is the final stage of the event stream and acts as a final catch-all for any potential issues or errors that were not caught by each individual handler's failure handling logic. This stage is invoked when a handler throws an exception and the individual handler's failure handling is set to ABORT. You can define what you want to do with a given error using the scripting area.

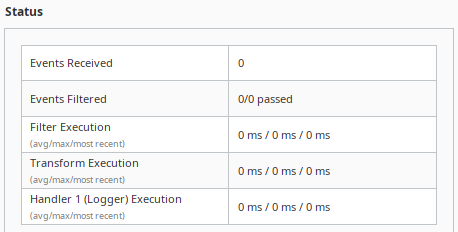

Checking Event Stream Status

The Status panel, to the right of the event stream stages, shows information such as how many events were received, how many were filtered, and execution times for the filter, transform, and handler stages. The table below describes each status metric.

| Metric | Description |
|---|---|
| Events Received | The number of events received by the event stream. |
| Events Filtered | The number of events that have passed through the filter. |
| Filter Execution | The amount of time an event takes to go through the filter stage. Measurements include the average time per event, the maximum time for any single event, and the time for the most recent event. |
| Transform Execution | The amount of time an event takes to go through the transform stage. Measurements include the average time per event, the maximum time for any single event, and the time for the most recent event. |
| Handler Execution | The amount of time an event takes to go through the specified handler. An additional row is added for each new handler. Measurements include the average time per event, the maximum time for any single event, and the time for the most recent event. |
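The average, maximum, and most recent execution times in this table can be tracked with a small accumulator. The ExecutionStats class and timed helper below are hypothetical, plain Python illustrations of the bookkeeping, not how the Gateway collects these metrics.

```python
import time


class ExecutionStats(object):
    """Hypothetical tracker for average, maximum, and last execution time (ms)."""

    def __init__(self):
        self.count = 0
        self.total = 0.0
        self.maximum = 0.0
        self.last = None

    def record(self, duration_ms):
        self.count += 1
        self.total += duration_ms
        self.maximum = max(self.maximum, duration_ms)
        self.last = duration_ms

    @property
    def average(self):
        return self.total / self.count if self.count else 0.0


def timed(stats, func, *args):
    """Call func(*args) and record its wall-clock duration in milliseconds."""
    start = time.perf_counter()
    try:
        return func(*args)
    finally:
        stats.record((time.perf_counter() - start) * 1000.0)
```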

Testing Your Event Stream

While creating your event stream, you can test it using the Test Controls panel. To toggle the panel, click the Test Panels icon in the top right of the Designer window. You can configure your test run with the following properties.

| Test Controls Property | Description |
|---|---|
| Source Object | The source data to test your event stream with. You can test with either the most recent source object or data you enter manually. |
| Encoder | The encoding format to use for the source data in the test run. You can encode the data in the following formats: |
| Dry Run | Whether the event from the Test Controls panel should be stored as a regular event. The test event still runs through the event stream as if it were a regular event, but enabling this property prevents it from becoming an immutable event. |
| Run All | Runs the event stream test. You can also run the event stream only up to the filter or transform stage by clicking the drop-down arrow on the right. |

Viewing Event Stream Metrics in the Gateway

The Gateway Webpage includes a page that lists the projects on the Gateway and their event streams, along with metrics about each stream. See the Event Streams section of the Services page for more information.